The AI Verdict [1/27]

The latest AI developments affecting legal professionals

The past year brought increased government scrutiny of AI technologies worldwide as proposed laws and regulations matured. The EU's Artificial Intelligence Act is expected to set a precedent for future risk-based regulatory approaches.

In January 2023, the National Institute of Standards and Technology (NIST) released version 1.0 of its AI Risk Management Framework, and EU lawmakers anticipate that the European Parliament will vote on the proposed text of the AI Act this year. These efforts aim to address the growing complexity of regulating AI systems and tools.

In October 2022, the White House Office of Science and Technology Policy released the "Blueprint for an AI Bill of Rights," a set of five non-binding principles meant to guide the use of AI, protect the American public, and minimize potential harms from AI.

In August 2022, the FTC announced that it was seeking public comment on data privacy and security practices that harm consumers, with a focus on possible new rules for companies that collect, use, and monetize consumer data. The inquiry also covers algorithmic decision-making and discrimination.

Several bills introduced in 2022 and 2023 address various aspects of AI, including risk management, integration, bias assessment, regulation of digital platforms, data privacy, and health disparities caused by AI.

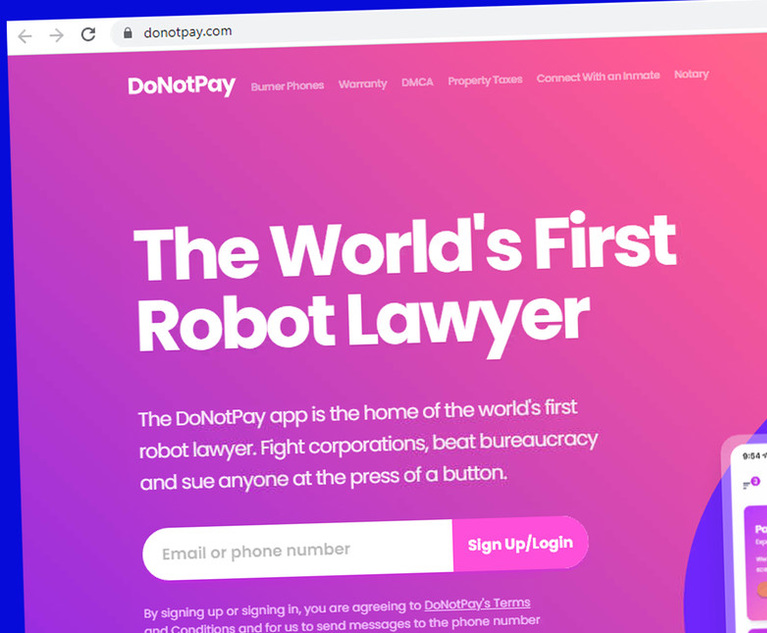

DoNotPay, a company founded by Joshua Browder, has developed a chatbot powered by the GPT-3.5 model that assists individuals with legal issues such as contesting parking tickets.

In December 2022, CEO Joshua Browder announced plans to have the chatbot represent a defendant in a New York traffic court case over a speeding ticket.

He also offered $1 million to any lawyer or person with an upcoming case before the Supreme Court of the United States (SCOTUS) who would let DoNotPay's robot lawyer handle the arguments, relayed through AirPods.

However, these announcements drew scrutiny from prosecutors and legal experts, who questioned whether using the chatbot in a court of law was legal, and some prosecutors threatened the CEO with jail time if he followed through.

In response, DoNotPay announced that it would discontinue its "non-consumer legal rights products" and focus on developing products to help individuals with everyday tasks like lowering bills and canceling subscriptions.

Two lawsuits have been filed against the makers of AI image generators, alleging that the tools infringe the copyrights of the artists whose images they use.

Getty Images has initiated legal proceedings against Stability AI, the maker of Stable Diffusion, claiming that the company copied millions of its images while ignoring available licensing options.

Three visual artists filed a class action lawsuit in U.S. federal court in San Francisco on behalf of the visual arts community, alleging that AI image generators "violate the rights of millions of artists."

Both lawsuits argue that AI image generators, by scraping images from the web, unjustly rob artists by using their work without crediting or compensating them.

The Civil Rights Act of 1964 underlies the modern conception of illegal bias, but as AI systems automate more analyses, it is becoming harder to make decisions transparent and to hold decision-makers accountable.

Some developers are improving the technical transparency of their AI systems, but such efforts are not universal, and technical transparency does not necessarily explain to the public or the courts how a system arrives at its outputs.

The problem of AI discrimination is compounded because decision-making supply chains now involve more actors, and regulators treat the most technical actors differently from consumer-facing service providers.

New York City, California, Connecticut, and the D.C. Council are responding by passing new regulations or investigating AI discrimination in a range of areas.

Organizations and governments can mitigate these problems by clarifying how existing anti-discrimination laws apply to AI systems, applying current anti-discrimination standards to AI decision-making systems, and testing algorithmic systems for bias early and often.

Chinese courts are now using AI to assist with legal decision-making through programs such as "Little Wisdom" (Xiao Zhi 3.0).

This AI assistant handles repetitive tasks such as announcing court procedures, and it can now also analyze case materials, record testimony, and verify information against databases in real time.

The use of AI in legal decision-making has helped courts settle disputes more quickly. In one case involving 10 people who had failed to repay bank loans, the dispute was settled in a single hearing that lasted just 30 minutes.

AI is also being used to suggest penalties in criminal cases, drawing on big data analysis of previous similar cases.

However, using AI in legal decision-making raises ethical concerns: it may lead to a loss of accountability, and its recommendations may be subject to bias.

ChatGPT, a free chatbot from OpenAI, recently took the multiple-choice portion of the bar exam. It did not pass, but it performed better than expected in some areas, such as evidence and torts.

Law professors are both excited and concerned about the chatbot: some see it as a tool for legal education, while others worry that students will pass off chatbot-generated work as their own.

ChatGPT is not sophisticated enough to earn a student an "A" without additional work, and other law-focused AI tools are better suited to specific tasks.

Some skeptics, concerned about the future implications of AI in the legal field, are urging professors to rethink take-home exams.

To prevent academic dishonesty involving AI-generated text, law professors may soon start asking students to disclose which specific technology tools they used in their work.
